Overview

The GLM Coding Plan is a subscription package designed specifically for AI-powered coding. Starting at $3/month, it brings GLM-4.6 to popular coding tools such as Claude Code, Cline, and OpenCode, with the aim of making coding faster, smarter, and more reliable.

- Longer context window: The context window has been expanded from 128K to 200K tokens, enabling the model to handle more complex agentic tasks.

- Superior coding performance: The model achieves higher scores on coding benchmarks and performs better in real-world use in applications such as Claude Code, Cline, Roo Code, and Kilo Code, including generating more visually polished front-end pages.

- Advanced reasoning: GLM-4.6 shows a clear improvement in reasoning performance and supports tool use during inference, leading to stronger overall capability.

- More capable agents: GLM-4.6 exhibits stronger performance in tool use and search-based agents, and integrates more effectively within agent frameworks.

- Refined writing: GLM-4.6 aligns better with human preferences in style and readability, and performs more naturally in role-playing scenarios.

Input Modalities: Text

Output Modalities: Text

Context Length: 200K tokens

Maximum Output Tokens: 128K tokens
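The two limits above interact when sizing a request. The following is a minimal sketch that computes the largest output length that still fits. It assumes that "200K" and "128K" mean exactly 200,000 and 128,000 tokens, and that the prompt and the generated output share the same context window. Neither assumption is stated on this page.

```python
# Minimal sketch: clamp a requested output length to the documented limits.
# Assumptions (not confirmed by this page): "200K" = 200_000 and "128K" = 128_000
# tokens, and prompt tokens plus output tokens share one context window.
CONTEXT_LENGTH = 200_000     # documented context length (assumed exact value)
MAX_OUTPUT_TOKENS = 128_000  # documented maximum output tokens (assumed exact value)


def max_output_budget(prompt_tokens: int, requested: int = MAX_OUTPUT_TOKENS) -> int:
    """Return the largest output length that fits alongside a prompt of the given size."""
    if prompt_tokens < 0 or requested < 0:
        raise ValueError("token counts must be non-negative")
    remaining = CONTEXT_LENGTH - prompt_tokens
    if remaining <= 0:
        raise ValueError("prompt alone fills the context window")
    # The tightest of the three limits wins.
    return min(requested, MAX_OUTPUT_TOKENS, remaining)


print(max_output_budget(50_000))   # output cap is the binding limit
print(max_output_budget(150_000))  # remaining context is the binding limit
```

With a short prompt, the 128K output cap is the binding limit. Once the prompt exceeds 72K tokens under these assumptions, the remaining context window becomes the constraint instead.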

Introducing GLM-4.6

1. Comprehensive Evaluation

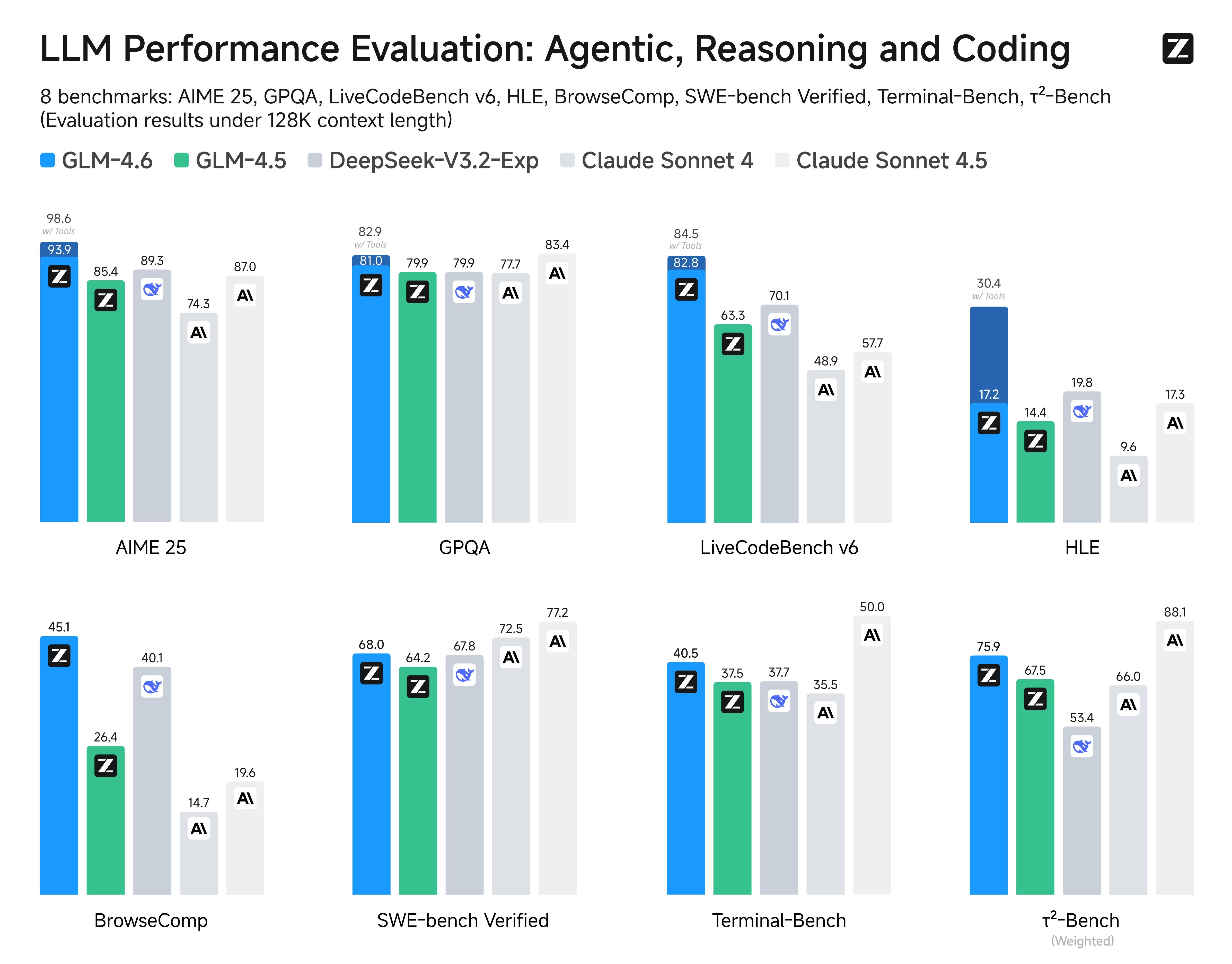

In evaluations across 8 authoritative benchmarks for general model capabilities, including AIME 25, GPQA, LCB v6, HLE, and SWE-Bench Verified, GLM-4.6 performs on par with Claude Sonnet 4/Claude Sonnet 4.6 on several leaderboards, solidifying its position as the top model developed in China.

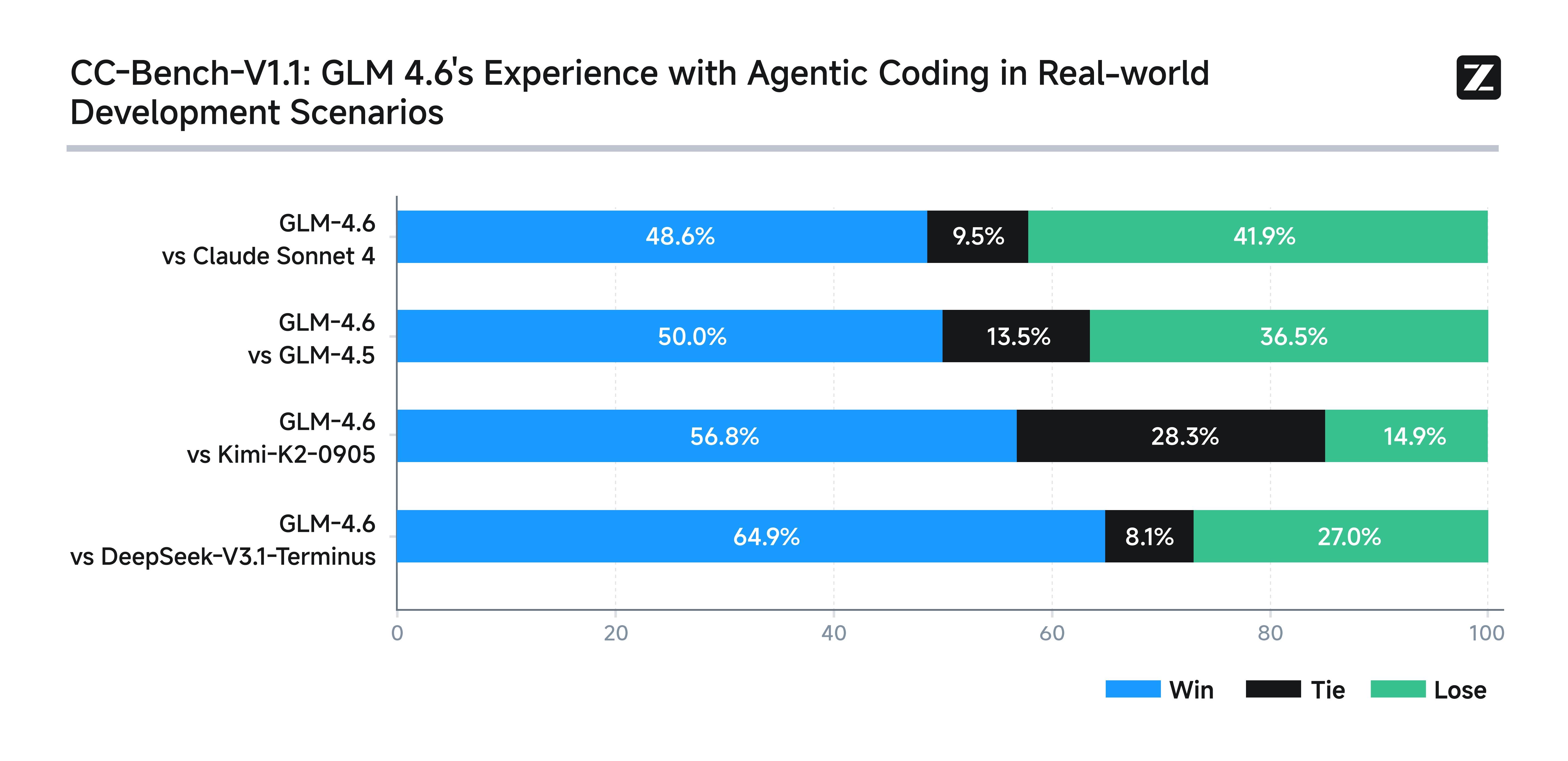

2. Real-World Coding Evaluation

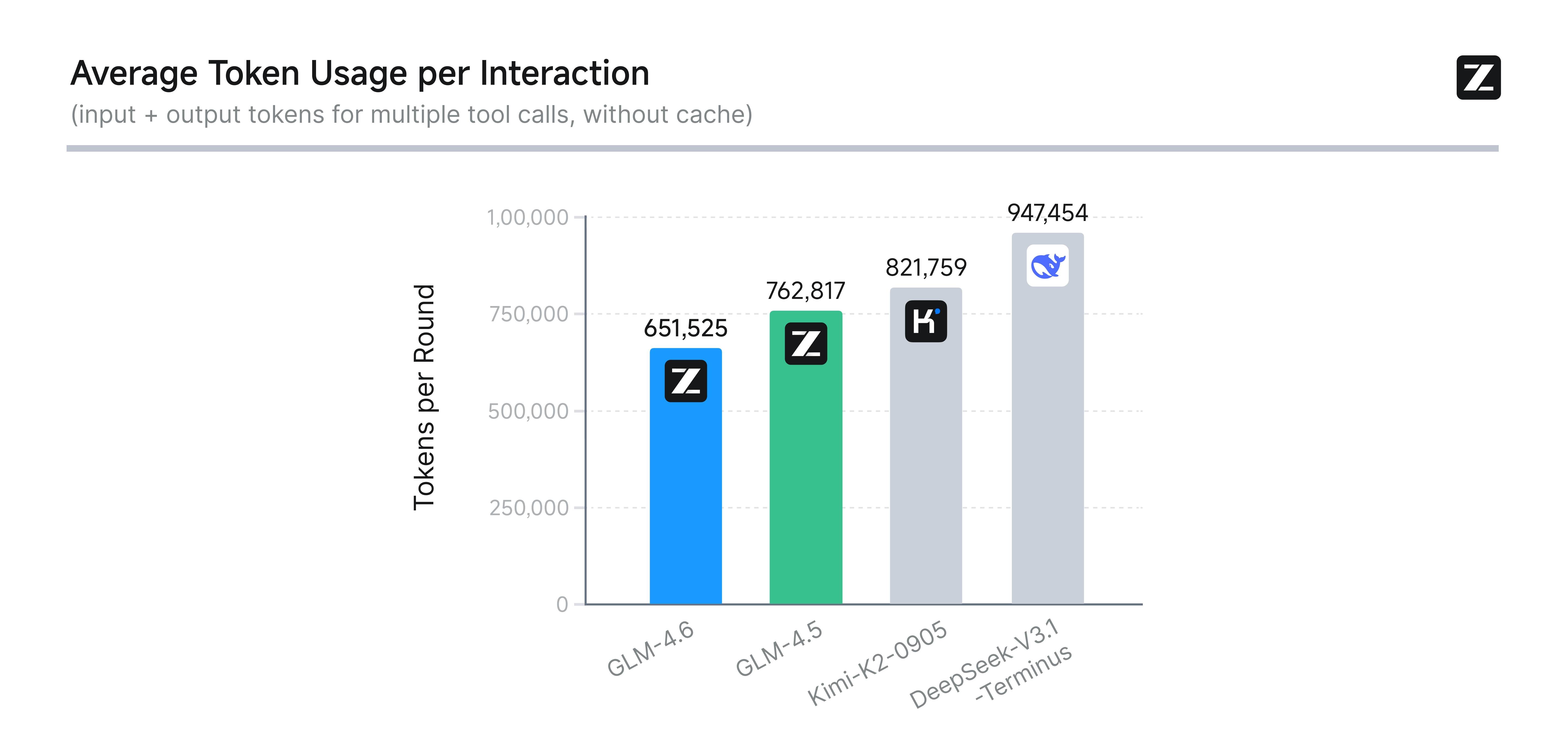

To better test the model’s capabilities in practical coding tasks, we conducted 74 real-world coding tests within the Claude Code environment. The results show that GLM-4.6 surpasses Claude Sonnet 4 and other models developed in China in these tests. In terms of average token consumption, GLM-4.6 is over 30% more efficient than GLM-4.5, achieving the lowest consumption rate among comparable models.

To ensure transparency and credibility, Z.ai has publicly released all test questions and agent trajectories for verification and reproduction.

Usage

AI Coding

Supports mainstream languages including Python, JavaScript, and Java, delivering superior aesthetics and logical layout in frontend code. Natively handles diverse agent tasks with enhanced autonomous planning and tool invocation capabilities. Excels in task decomposition, cross-tool collaboration, and dynamic adjustments, enabling flexible adaptation to complex development or office workflows.
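In agent workflows, tool invocation usually means the application runs the function the model requests and sends the result back as a new message. The following is a minimal sketch of that loop's dispatch step. It assumes the GLM-4.6 chat API accepts and returns tool calls in the OpenAI-compatible `tools` / `tool_calls` format, so confirm the exact shape in the API documentation. The `count_lines` tool is hypothetical.

```python
import json
from typing import Any, Callable, Dict, List


# Hypothetical local tool; its name and behavior are illustrative only.
def count_lines(source: str) -> int:
    """Count the lines in a snippet of source code."""
    return len(source.splitlines())


# Tool schema in the OpenAI-compatible "function" format (assumed, not confirmed here).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "count_lines",
        "description": "Count the lines in a snippet of source code.",
        "parameters": {
            "type": "object",
            "properties": {"source": {"type": "string"}},
            "required": ["source"],
        },
    },
}]

# Maps tool names the model may request to local Python callables.
REGISTRY: Dict[str, Callable[..., Any]] = {"count_lines": count_lines}


def run_tool_calls(assistant_message: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Execute each requested tool call and build the "tool" messages to send back."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return results


# An assistant turn shaped like an OpenAI-compatible tool-call response (made up for illustration).
reply = {
    "role": "assistant",
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "count_lines", "arguments": json.dumps({"source": "a = 1\nb = 2\n"})},
    }],
}
print(run_tool_calls(reply))
```

The returned `tool` messages would be appended to the conversation and sent back with `TOOLS`, letting the model continue planning with the results.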

Smart Office

Significantly enhances presentation quality in PowerPoint creation and office automation scenarios. Generates aesthetically advanced layouts with clear logical structures while preserving content integrity and expression accuracy, making it ideal for office automation systems and AI presentation tools.

Translation and Cross-Language Applications

Translation quality for less commonly supported languages (such as French, Russian, Japanese, and Korean) and for informal contexts has been further optimized, making it particularly suitable for social media, e-commerce content, and short-form drama translation. It maintains semantic coherence and stylistic consistency across lengthy passages while adapting style and localizing expressions, meeting the diverse needs of global enterprises and cross-border services.

Content Creation

Supports diverse content production including novels, scripts, and copywriting, achieving more natural expression through contextual expansion and emotional regulation.

Virtual Characters

Maintains consistent tone and behavior across multi-turn conversations, ideal for virtual humans, social AI, and brand personification operations, making interactions warmer and more authentic.

Intelligent Search & Deep Research

Enhances user intent understanding, tool retrieval, and result integration. Not only does it return more precise search results, but it also deeply synthesizes outcomes to support Deep Research scenarios, delivering more insightful answers to users.

Resources

- API Documentation: Learn how to call the API.

Quick Start

The following sample code shows how to get started with GLM-4.6, covering both a basic call and a streaming call.
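The following is a minimal sketch of a basic call and a streaming call using only the Python standard library. It rests on three assumptions that should be confirmed against the API documentation: the OpenAI-compatible endpoint `https://api.z.ai/api/paas/v4/chat/completions`, the model identifier `glm-4.6`, and streamed chunks delivered as server-sent events (`data: {...}` lines ending with `data: [DONE]`). The `ZAI_API_KEY` environment variable name is hypothetical.

```python
import json
import os
import urllib.request
from typing import Any, Dict, Iterable, Iterator, List

# Assumed endpoint for the OpenAI-compatible chat API; confirm it in the API documentation.
API_URL = "https://api.z.ai/api/paas/v4/chat/completions"


def build_payload(messages: List[Dict[str, str]], stream: bool = False) -> Dict[str, Any]:
    """Request body for a GLM-4.6 chat completion (model id assumed to be "glm-4.6")."""
    return {"model": "glm-4.6", "messages": messages, "stream": stream}


def iter_stream_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text deltas from server-sent-event lines until "data: [DONE]"."""
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and other SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


def chat(messages: List[Dict[str, str]], stream: bool = False) -> str:
    """Basic call (stream=False) or streaming call (stream=True); returns the full reply text."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(messages, stream)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Hypothetical environment variable holding the API key.
            "Authorization": f"Bearer {os.environ['ZAI_API_KEY']}",
        },
    )
    with urllib.request.urlopen(request) as response:
        if not stream:
            body = json.loads(response.read())
            return body["choices"][0]["message"]["content"]
        parts = []
        for text in iter_stream_text(response):  # the HTTP response iterates line by line
            print(text, end="", flush=True)      # show tokens as they arrive
            parts.append(text)
        print()
        return "".join(parts)
```

For example, `chat([{"role": "user", "content": "Write a Python function that reverses a string."}])` performs a basic call. Passing `stream=True` prints the reply incrementally and still returns the assembled text. Keeping the SSE parsing in `iter_stream_text` separate from the network code lets it be tested without an API key.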